3 of 3: CI/CD the Easy Way Using AWS CodePipeline

This article is one of three (3) in a series:

1 of 3: CI/CD the Easy Way Using AWS CodePipeline

In part 1, you will use AWS CodePipeline to automate deployment of application changes.

2 of 3: CI/CD the Easy Way Using AWS CodePipeline

In part 2, you will use AWS CodePipeline to add a Testing stage to your pipeline.

3 of 3: CI/CD the Easy Way Using AWS CodePipeline

In part 3, you will use AWS CodePipeline to add Staging and Manual Approval stages to your pipeline. You will decommission the infrastructure once done, because this is only proof-of-concept.

For background on this series, go here:

CI/CD the Easy Way Using AWS CodePipeline | A Three-Part Series

1 of 16. [Cloud9] Provision Staging Environment using Terraform

Add 'staging' to `human-gov-infrastructure/terraform/variables.tf`, then apply the Terraform configuration.

human-gov-infrastructure/terraform/variables.tf

    variable "states" {
      description = "The list of state names"
      default     = ["california", "florida", "staging"]
    }

cd ~/environment/human-gov-infrastructure/terraform

terraform plan

terraform apply
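If you would rather script the edit than open the file, here is a minimal sketch. `add_state` is a hypothetical helper (it is not part of the project), and it assumes the `default` list sits on a single line, as it does in the file above.

```shell
# Hypothetical helper: append a state name to the single-line "default" list
# in a Terraform variables file. Does nothing if the name is already listed.
add_state() {
  file=$1
  state=$2
  if grep -q "\"$state\"" "$file"; then
    return 0
  fi
  # Insert the new name just before the closing bracket of the default list.
  sed -i.bak "s/^\([[:space:]]*default[[:space:]]*=[[:space:]]*\[.*\)]/\1, \"$state\"]/" "$file"
  rm -f "$file.bak"
}
```

For example, `add_state variables.tf staging`. Review the output of `terraform plan` before you run `terraform apply`.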

2 of 16. [Cloud9] Provision Staging Environment: State (Kubernetes)

Create humangov-staging.yaml with the staging-specific resource names. It is fine to use humangov-california.yaml as a template, but be sure to replace california with staging and to use the staging-specific name for the S3 bucket. Replace CONTAINER_IMAGE with the image URI from the ECR repository, then deploy the HumanGov staging application.

cd ~/environment/human-gov-application/src

cp humangov-california.yaml humangov-staging.yaml

~/environment/human-gov-application/src/humangov-staging.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: humangov-python-app-staging
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: humangov-python-app-staging
      template:
        metadata:
          labels:
            app: humangov-python-app-staging
        spec:
          serviceAccountName: humangov-pod-execution-role
          containers:
            - name: humangov-python-app-staging
              image: public.ecr.aws/i7y0m4q9/humangov-app:7524d2cef9b983cf94d0d0d03392ad0039ff45ed
              env:
                - name: AWS_BUCKET
                  value: "humangov-staging-s3-wrd2"
                - name: AWS_DYNAMODB_TABLE
                  value: "humangov-staging-dynamodb"
                - name: AWS_REGION
                  value: "us-east-1"
                - name: US_STATE
                  value: "staging"
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: humangov-python-app-service-staging
    spec:
      type: ClusterIP
      selector:
        app: humangov-python-app-staging
      ports:
        - protocol: TCP
          port: 8000
          targetPort: 8000
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: humangov-nginx-reverse-proxy-staging
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: humangov-nginx-reverse-proxy-staging
      template:
        metadata:
          labels:
            app: humangov-nginx-reverse-proxy-staging
        spec:
          containers:
            - name: humangov-nginx-reverse-proxy-staging
              image: nginx:alpine
              ports:
                - containerPort: 80
              volumeMounts:
                - name: humangov-nginx-config-staging-vol
                  mountPath: /etc/nginx/
          volumes:
            - name: humangov-nginx-config-staging-vol
              configMap:
                name: humangov-nginx-config-staging
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: humangov-nginx-service-staging
    spec:
      selector:
        app: humangov-nginx-reverse-proxy-staging
      ports:
        - protocol: TCP
          port: 80
          targetPort: 80
    ---
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: humangov-nginx-config-staging
    data:
      nginx.conf: |
        events {
          worker_connections 1024;
        }
        http {
          server {
            listen 80;
            location / {
              proxy_set_header Host $host;
              proxy_set_header X-Real-IP $remote_addr;
              proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
              proxy_pass http://humangov-python-app-service-staging:8000; # App container
            }
          }
        }
      proxy_params: |
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

Apply the staging file

kubectl apply -f humangov-staging.yaml
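The templating step above (copy the California manifest, rename the state, swap in the staging bucket) could be scripted roughly as follows. `make_staging_manifest` is a hypothetical helper, and the sketch assumes each `value:` line directly follows its `name:` line, as in the manifest shown.

```shell
# Hypothetical helper: build a staging manifest from another state's manifest.
make_staging_manifest() {
  src=$1       # existing manifest, e.g. humangov-california.yaml
  dest=$2      # manifest to write, e.g. humangov-staging.yaml
  bucket=$3    # name of the staging S3 bucket
  # Rename the state everywhere, then overwrite the AWS_BUCKET value, because
  # the bucket name's suffix is not the same for every state.
  sed 's/california/staging/g' "$src" |
    awk -v bucket="$bucket" '
      prev ~ /name: AWS_BUCKET/ && /value:/ {
        sub(/value:.*/, "value: \"" bucket "\"")
      }
      { print; prev = $0 }
    ' > "$dest"
}
```

For example, `make_staging_manifest humangov-california.yaml humangov-staging.yaml humangov-staging-s3-wrd2`. It is still worth reading the generated file before applying it.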

3 of 16. [Cloud9] Provision Staging Environment: Ingress (Kubernetes)

Add 'staging' to humangov-ingress-all.yaml

~/environment/human-gov-application/src/humangov-ingress-all.yaml

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: humangov-python-app-ingress
      annotations:
        alb.ingress.kubernetes.io/scheme: internet-facing
        alb.ingress.kubernetes.io/target-type: ip
        alb.ingress.kubernetes.io/group.name: frontend
        alb.ingress.kubernetes.io/certificate-arn: arn:aws:acm:us-east-1:502983865814:certificate/94775391-6fcd-42dd-83eb-bb338360575d
        alb.ingress.kubernetes.io/listen-ports: '[{"HTTP": 80}, {"HTTPS":443}]'
        alb.ingress.kubernetes.io/ssl-redirect: '443'
      labels:
        app: humangov-python-app-ingress
    spec:
      ingressClassName: alb
      rules:
        - host: california.humangov-ll3.click
          http:
            paths:
              - path: /
                pathType: Prefix
                backend:
                  service:
                    name: humangov-nginx-service-california
                    port:
                      number: 80
        - host: florida.humangov-ll3.click
          http:
            paths:
              - path: /
                pathType: Prefix
                backend:
                  service:
                    name: humangov-nginx-service-florida
                    port:
                      number: 80
        - host: staging.humangov-ll3.click
          http:
            paths:
              - path: /
                pathType: Prefix
                backend:
                  service:
                    name: humangov-nginx-service-staging
                    port:
                      number: 80

Deploy the ingress change

kubectl apply -f humangov-ingress-all.yaml

4 of 16. [Route 53] Provision Staging Environment: DNS (Route 53)

Add the Route 53 DNS entry for the Staging environment

Route 53 -/- [Hosted zones]

[humangov-ll3.click]

[Create record]

Record name: staging

Alias

Route traffic to:

Choose endpoint: Alias to Application and Classic Load Balancer

Choose Region: US East (N. Virginia)

Choose load balancer: The one you created earlier for california/florida.

[Create records]
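The same alias record can be created from the AWS CLI instead of the console. This sketch only builds the change batch. `alias_change_batch` is a hypothetical helper, and the load balancer DNS name and hosted zone ID are values you would look up for your own load balancer.

```shell
# Hypothetical helper: print a Route 53 change batch that points an alias
# A record at a load balancer.
alias_change_batch() {
  record_name=$1    # e.g. staging.humangov-ll3.click
  alb_dns_name=$2   # DNS name of the load balancer
  alb_zone_id=$3    # canonical hosted zone ID of the load balancer
  cat <<EOF
{
  "Changes": [
    {
      "Action": "UPSERT",
      "ResourceRecordSet": {
        "Name": "$record_name",
        "Type": "A",
        "AliasTarget": {
          "HostedZoneId": "$alb_zone_id",
          "DNSName": "$alb_dns_name",
          "EvaluateTargetHealth": false
        }
      }
    }
  ]
}
EOF
}
```

Save the output to a file such as change.json, then pass it to `aws route53 change-resource-record-sets --hosted-zone-id <your hosted zone ID> --change-batch file://change.json`.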

5 of 16. [Browser] Provision Staging Environment: Test the application

Go to the URL: https://staging.humangov-ll3.click
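If you want a quick check from the terminal as well as the browser, a small smoke test might look like this. `smoke_test` is a hypothetical helper, and it assumes the home page answers with HTTP 200 once DNS has propagated.

```shell
# Hypothetical helper: succeed only if the URL answers with HTTP 200.
smoke_test() {
  url=$1
  # -w prints only the status code; the response body is discarded.
  status=$(curl -s -o /dev/null -w '%{http_code}' --max-time 10 "$url")
  if [ "$status" = "200" ]; then
    echo "OK $url"
  else
    echo "FAIL $url (HTTP $status)" >&2
    return 1
  fi
}
```

For example, `smoke_test https://staging.humangov-ll3.click`.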

6 of 16. [CodePipeline] Add 'HumanGovDeployToStaging' stage to Code Pipeline

AWS CodePipeline -/- Pipelines -/- [human-gov-cicd-pipeline]

[Edit]

Add a new stage AFTER 'HumanGovTest' and BEFORE 'HumanGovDeployToProduction'.

Stage name: HumanGovDeployToStaging

[Add stage]

[Add action group]

Action name: HumanGovDeployToStaging

Action provider: AWS CodeBuild

Region: US East (N. Virginia)

Input artifacts: BuildArtifact

Project name: [Create project]

Project name: HumanGovDeployToStaging

Environment image: Managed image

Compute: EC2

Operating system: Amazon Linux

Runtime(s): Standard

Image: aws/codebuild/amazonlinux2-x86_64-standard:4.0

Service role: New service role

Role name: autogenerated

Additional Configuration:

Privileged: Enable this flag if you want to build Docker images or want your builds to get elevated privileges

VPC: ekscl-cluster VPC

Subnets: the two private subnets in that VPC

Security groups: eks-cluster-sg-...

[Validate VPC Settings]

Environment variables:

Name: AWS_ACCESS_KEY_ID Value: XXXXXXXXX

Name: AWS_SECRET_ACCESS_KEY Value: YYYYYYYYYYYYYYYYYYYYYY

Buildspec:

Build specifications: Insert build commands

[Switch to editor]

Add the contents of the 'buildspec.yml' shown below.

[Continue to CodePipeline]

Project name: HumanGovDeployToStaging

[Done]

[Save]

buildspec.yml

    version: 0.2
    phases:
      install:
        runtime-versions:
          docker: 20
        commands:
          - curl -o kubectl https://amazon-eks.s3.us-west-2.amazonaws.com/1.18.9/2020-11-02/bin/linux/amd64/kubectl
          - chmod +x ./kubectl
          - mv ./kubectl /usr/local/bin
          - kubectl version --short --client
      post_build:
        commands:
          - aws eks update-kubeconfig --region $AWS_DEFAULT_REGION --name humangov-cluster
          - kubectl get nodes
          - ls
          - cd src
          - IMAGE_URI=$(jq -r '.[0].imageUri' imagedefinitions.json)
          - echo $IMAGE_URI
          - sed -i "s|CONTAINER_IMAGE|$IMAGE_URI|g" humangov-staging.yaml
          - kubectl apply -f humangov-staging.yaml
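The `sed` line in post_build replaces the text CONTAINER_IMAGE with the image URI. If the manifest still contains a hard-coded image, `sed` changes nothing and still exits successfully, so the old image is applied again. A guarded version of the substitution might look like the sketch below. `render_manifest` is a hypothetical helper, not something the buildspec above defines.

```shell
# Hypothetical helper: print the manifest with the placeholder replaced,
# or fail if the placeholder is not there to replace.
render_manifest() {
  manifest=$1
  image_uri=$2
  if ! grep -q 'CONTAINER_IMAGE' "$manifest"; then
    echo "no CONTAINER_IMAGE placeholder in $manifest" >&2
    return 1
  fi
  # '|' is the sed delimiter because image URIs contain '/' and ':'.
  sed "s|CONTAINER_IMAGE|$image_uri|g" "$manifest"
}
```

Because the helper prints to standard output, the result can be piped into `kubectl apply -f -`, which leaves the file in the artifact unchanged.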

7 of 16. [CodePipeline] Add 'manual approval' stage.

This stage goes AFTER the 'HumanGovDeployToStaging' stage and BEFORE the 'HumanGovDeployToProduction' stage. It changes the pipeline from continuous deployment to continuous delivery. Note: you can configure notifications so that you are alerted whenever a manual approval is pending.

AWS CodePipeline -/- Pipelines -/- [human-gov-cicd-pipeline]

[Edit]

[Add stage]

Stage name: ManualApproval

[Add Action group]

Action name: ManualApproval

Action provider: Manual approval

[Done]

[Save]

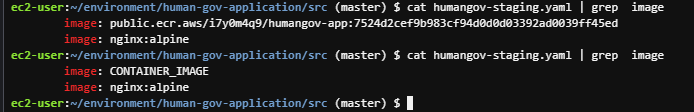

8 of 16. [Cloud9] Update image URI in 'humangov-staging.yaml'

Use the placeholder 'CONTAINER_IMAGE' in place of the URI of the latest image.

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: humangov-python-app-staging
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: humangov-python-app-staging
      template:
        metadata:
          labels:
            app: humangov-python-app-staging
        spec:
          serviceAccountName: humangov-pod-execution-role
          containers:
            - name: humangov-python-app-staging
              image: CONTAINER_IMAGE
              env:
                - name: AWS_BUCKET
                  value: "humangov-staging-s3-wrd2"
                - name: AWS_DYNAMODB_TABLE
                  value: "humangov-staging-dynamodb"
                - name: AWS_REGION
                  value: "us-east-1"
                - name: US_STATE
                  value: "staging"
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: humangov-python-app-service-staging
    spec:
      type: ClusterIP
      selector:
        app: humangov-python-app-staging
      ports:
        - protocol: TCP
          port: 8000
          targetPort: 8000
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: humangov-nginx-reverse-proxy-staging
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: humangov-nginx-reverse-proxy-staging
      template:
        metadata:
          labels:
            app: humangov-nginx-reverse-proxy-staging
        spec:
          containers:
            - name: humangov-nginx-reverse-proxy-staging
              image: nginx:alpine
              ports:
                - containerPort: 80
              volumeMounts:
                - name: humangov-nginx-config-staging-vol
                  mountPath: /etc/nginx/
          volumes:
            - name: humangov-nginx-config-staging-vol
              configMap:
                name: humangov-nginx-config-staging
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: humangov-nginx-service-staging
    spec:
      selector:
        app: humangov-nginx-reverse-proxy-staging
      ports:
        - protocol: TCP
          port: 80
          targetPort: 80
    ---
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: humangov-nginx-config-staging
    data:
      nginx.conf: |
        events {
          worker_connections 1024;
        }
        http {
          server {
            listen 80;
            location / {
              proxy_set_header Host $host;
              proxy_set_header X-Real-IP $remote_addr;
              proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
              proxy_pass http://humangov-python-app-service-staging:8000; # App container
            }
          }
        }
      proxy_params: |
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

9 of 16. [Cloud9] Modify the application to test the process.

On the home page, change 'HumanGov SaaS Application' back to 'HumanGov'. Then commit and push the change.

git status

git add -A

git status

git commit -m "changed home page -- REMOVED 'SaaS Application'"

git push

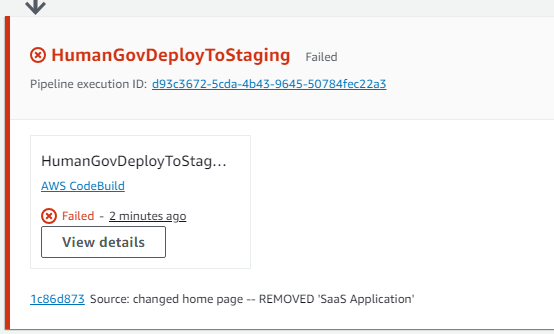

10 of 16. [CodePipeline] HumanGovDeployToStaging Failed.

The logs show that the build container cannot find the file humangov-staging.yaml. The cause appears to be the earlier 'Build' stage: the list of files it copies over DID NOT include humangov-staging.yaml.

11 of 16. [CodePipeline] Update the 'HumanGovBuild' stage

While it would be simpler to just say 'copy all the files', in this case we will add only that specific file to the list.

AWS CodePipeline -/- Pipelines -/- [human-gov-cicd-pipeline]

[Edit]

Edit: Build -/- AWS CodeBuild

HumanGovBuild [Edit]

Update the Buildspec, adding to the list of files:

- src/humangov-staging.yaml

[Update project]
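A pre-deploy check along these lines would have reported the missing file by name before `kubectl` ran. This is a sketch only. `require_files` is a hypothetical helper, and the file names in the usage note are the ones the staging buildspec reads.

```shell
# Hypothetical helper: fail fast if any expected file is missing from a directory.
require_files() {
  dir=$1
  shift
  missing=0
  for f in "$@"; do
    if [ ! -f "$dir/$f" ]; then
      echo "missing from artifact: $f" >&2
      missing=1
    fi
  done
  # Non-zero when at least one file was not found.
  return "$missing"
}
```

For example, `require_files . src/imagedefinitions.json src/humangov-staging.yaml` as the first post_build command.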

12 of 16. [CodePipeline] Try the Pipeline again

Use 'Release change' to run the pipeline again.

AWS CodePipeline -/- Pipelines -/- [human-gov-cicd-pipeline]

[Release change]

[Release]

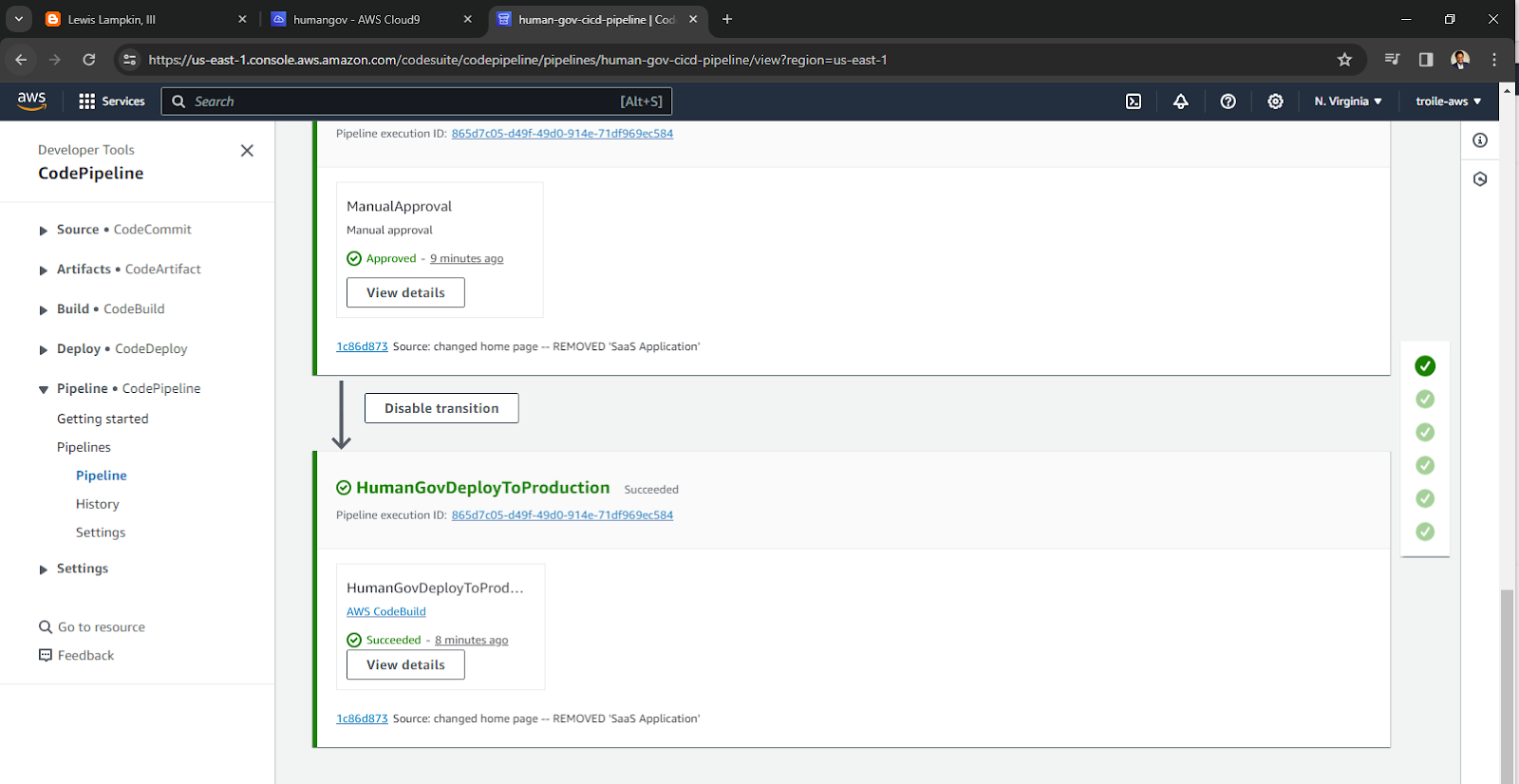

13 of 16. [CodePipeline] Perform Manual Approval

In this case, the change has been deployed to staging but not yet to the production sites. Use staging to preview the change, then decide whether it should be promoted to production.

Compare https://staging.humangov-ll3.click to https://california.humangov-ll3.click and https://florida.humangov-ll3.click. If everything looks acceptable, approve the change.

- Review the staging site, versus the other production sites.

- Determine that the change is acceptable.

[Review]

[Approve]

Add comments

[Submit]
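The approval can also be submitted from the AWS CLI. The sketch below uses two CodePipeline commands, `get-pipeline-state` to read the pending approval token and `put-approval-result` to approve. `approve_stage` is a hypothetical helper, and it assumes the stage and action are both named ManualApproval, as configured earlier.

```shell
# Hypothetical helper: approve a pending manual approval action.
approve_stage() {
  pipeline=$1
  stage=$2
  action=$3
  summary=$4   # keep this free of commas; it is passed in shorthand syntax
  # The token identifies the approval request that is currently open.
  token=$(aws codepipeline get-pipeline-state --name "$pipeline" \
    --query "stageStates[?stageName=='$stage'].actionStates[0].latestExecution.token" \
    --output text)
  if [ -z "$token" ] || [ "$token" = "None" ]; then
    echo "no pending approval for $stage" >&2
    return 1
  fi
  aws codepipeline put-approval-result \
    --pipeline-name "$pipeline" --stage-name "$stage" --action-name "$action" \
    --result "summary=$summary,status=Approved" --token "$token"
}
```

For example, `approve_stage human-gov-cicd-pipeline ManualApproval ManualApproval "Staging reviewed"`.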

14 of 16. [Browser] Check the websites

15 of 16. [CodePipeline] Review the Pipeline

16 of 16. [CodePipeline / CodeBuild / Cloud9] Cleaning Up the environment

Here's a checklist. Read it carefully! Some things you might want to keep.

# 1. Delete CodePipeline

AWS CodePipeline -/- Pipelines -/- select 'human-gov-cicd-pipeline'

[Delete pipeline]

# 2. Delete Build Projects

CodeBuild -/- Build projects -/- select 'HumanGovBuild' [Delete]

-/- select 'HumanGovDeployToStaging' [Delete]

-/- select 'HumanGovTest' [Delete]

-/- select 'HumanGovDeployToProduction' [Delete]

# 3. Delete Kubernetes Ingress

kubectl delete -f humangov-ingress-all.yaml

# 4. Delete Kubernetes State Resources

kubectl delete -f humangov-california.yaml

kubectl delete -f humangov-florida.yaml

kubectl delete -f humangov-staging.yaml

# 5. Delete EKS cluster

eksctl delete cluster --name humangov-cluster --region us-east-1

# 6. Check the console, validate the above resources have been deleted

# 7. Deactivate eks-user access keys

# Note: this makes the keys 'inactive'; it does NOT delete them

IAM -/- Access management -/- [users]

[eks-user]

[Security credentials]

Access keys -/- Actions -/- [Deactivate]

# 8. Change Cloud9 back to Managed Credentials

Cloud9 -/- Preferences -/- AWS Settings -/- [Credentials]

AWS managed temporary credentials [Enable]

# 9. STOP your Cloud9 instance

# Note: do not terminate it

# 10. KEEP KEEP KEEP KEEP -- DO NOT DELETE: DynamoDB, S3, ECR, Route 53 Hosted Zone, and Hosted Domain.

Keep these resources. They will be used in the future.
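Item 6 of the checklist can also be checked from the command line. The sketch below lists any remaining pipelines, build projects, and EKS clusters whose names look like they belong to this project. `report_leftovers` is a hypothetical helper, and the name pattern is an assumption based on the resource names used in this series.

```shell
# Hypothetical helper: list leftover HumanGov pipelines, build projects, and clusters.
report_leftovers() {
  region=$1
  {
    aws codepipeline list-pipelines --region "$region" --query 'pipelines[].name' --output text
    aws codebuild list-projects --region "$region" --query 'projects' --output text
    aws eks list-clusters --region "$region" --query 'clusters' --output text
  } | tr '\t' '\n' | grep -i -E 'human-?gov' || echo "nothing left"
}
```

For example, `report_leftovers us-east-1`. It deliberately does not look at DynamoDB, S3, ECR, or Route 53, because those resources are meant to stay.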

